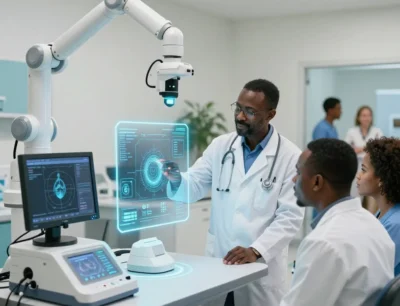

Inside the AI Race That’s Reshaping Global Healthcare

Anthropic and OpenAI launch healthcare AI tools just days apart. Is this a new era in medicine—or the start of an AI a



Utah tests AI to renew prescriptions without doctors. A bold step in healthcare automation with real-world patients and

India’s first government-run AI clinic opens in Noida — transforming public healthcare with AI diagnostics, genomics